Stochastic optimization refers to the use of randomness in the objective function or in the optimization algorithm.

Challenging optimization problems, such as high-dimensional nonlinear objective problems, may contain multiple local optima in which deterministic optimization algorithms can get stuck.

Stochastic optimization algorithms provide an alternative approach. They permit less optimal local decisions to be made within the search procedure, which may increase the probability of the procedure locating the global optima of the objective function.

In this article, you’ll find a useful introduction to stochastic optimization.

After finishing this article, you’ll know:

- Stochastic optimization algorithms make use of randomness as part of the search procedure.

- Examples of stochastic optimization algorithms, such as simulated annealing and genetic algorithms.

- Practical considerations when using stochastic optimization algorithms, such as repeated evaluations.

What is Stochastic Optimization?

Optimization refers to optimization algorithms that seek the inputs to a function that result in the minimum or maximum of an objective function.
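Stated as a formula (the symbols $f$ for the objective function, $\mathcal{X}$ for the set of candidate inputs and $x^{*}$ for the optimal input are notation introduced here, not taken from the original), minimization and maximization are two views of the same problem, because maximizing $f$ is equivalent to minimizing $-f$:

```latex
x^{*} = \arg\min_{x \in \mathcal{X}} f(x),
\qquad
\arg\max_{x \in \mathcal{X}} f(x) = \arg\min_{x \in \mathcal{X}} \bigl(-f(x)\bigr)
```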

Stochastic optimization or stochastic search refers to an optimization task that involves randomness in some way, either in the objective function or in the optimization algorithm.

Randomness in the objective function means that the evaluation of candidate solutions involves some uncertainty or noise, and algorithms must be chosen that can make progress in the search in the presence of this noise.
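A minimal sketch of what a noisy objective function looks like, assuming a simple bowl-shaped function with additive Gaussian noise (both `objective` and `noisy_objective` are hypothetical illustrations, and the noise level of 0.1 is arbitrary). Scoring the same candidate repeatedly gives different values, which is exactly the noise the search has to cope with:

```python
import random

def objective(x: float) -> float:
    """Hypothetical noise-free objective with its minimum at x = 0."""
    return x ** 2

def noisy_objective(x: float, rng: random.Random, noise_std: float = 0.1) -> float:
    """Hypothetical noisy version: the true value plus Gaussian noise."""
    return objective(x) + rng.gauss(0.0, noise_std)

rng = random.Random(1)

# Evaluating the same candidate solution repeatedly gives different scores.
repeated = [noisy_objective(1.0, rng) for _ in range(5)]
print(repeated)

# Averaging many evaluations gives a more reliable estimate of objective(1.0).
estimate = sum(noisy_objective(1.0, rng) for _ in range(1000)) / 1000
print(f"averaged estimate: {estimate:.3f} (true value: {objective(1.0)})")
```

Averaging is only one way of coping with noise; it costs extra function evaluations for every candidate.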

Randomness in the algorithm is used as a strategy, e.g., stochastic or probabilistic decisions. It is used as an alternative to deterministic decisions in an effort to improve the likelihood of locating the global optima or a better local optima.

When discussing stochastic optimization, it is more common to refer to an algorithm that uses randomness than to an objective function that contains noisy evaluations.

This is because randomness in the objective function can be addressed by using randomness in the optimization algorithm. As such, stochastic optimization may be referred to as “robust optimization.”

A deterministic algorithm may be misled (e.g., “deceived” or “confused”) by the noisy evaluation of candidate solutions or by noisy function gradients, causing the algorithm to bounce around or get stuck (e.g., fail to converge).

Using randomness in an optimization algorithm allows the search procedure to perform well on challenging optimization problems that may have a nonlinear response surface. This is achieved by the algorithm taking locally suboptimal steps or moves in the search space that allow it to escape local optima.

The randomness used in a stochastic optimization algorithm does not have to be true randomness; pseudorandomness is sufficient. A pseudorandom number generator is almost universally used in stochastic optimization.

The use of randomness in a stochastic optimization algorithm does not mean that the algorithm is random. Instead, it means that some decisions made during the search procedure involve some portion of randomness. For example, we can think of the move from the current point to the next point in the search space as being made according to a probability distribution relative to the optimal move.
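One way to picture this, as a minimal sketch: take a plain gradient-descent step as the hypothetical "optimal move" on the assumed objective f(x) = x**2, then sample the actual next point from a normal distribution centred on that move. The function names, learning rate and noise level are illustrative assumptions, not a specific published algorithm:

```python
import random

def gradient(x: float) -> float:
    """Derivative of the hypothetical objective f(x) = x**2."""
    return 2.0 * x

def stochastic_move(x: float, rng: random.Random, lr: float = 0.1, noise_std: float = 0.05) -> float:
    # The "best" deterministic move here is a plain gradient-descent step.
    best_next = x - lr * gradient(x)
    # The stochastic version samples the next point from a normal
    # distribution centred on that best move.
    return rng.gauss(best_next, noise_std)

rng = random.Random(0)
x = 3.0
for _ in range(100):
    x = stochastic_move(x, rng)
print(f"final point: {x:.4f}")
```

Each individual move is random, yet the search as a whole is still directed: the point drifts toward the minimum at 0 and then jitters around it on a scale set by `noise_std`.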

Now that we have an idea of what stochastic optimization is, let’s look at some examples of stochastic optimization algorithms.

Stochastic Optimization Algorithms

The use of randomness in these algorithms often means that the techniques are referred to as “heuristic search,” as they use a rough rule-of-thumb procedure that may or may not work to find the optima, rather than a precise procedure.

Many stochastic algorithms are inspired by biological or natural processes and may be referred to as “metaheuristics,” as they are higher-order procedures that provide the conditions for a specific search of the objective function. They are also referred to as “black box” optimization algorithms.

There are many stochastic optimization algorithms. Some examples of stochastic optimization algorithms include:

- Iterated Local Search

- Stochastic Hill Climbing

- Stochastic Gradient Descent

- Tabu Search

- Greedy Randomized Adaptive Search Procedure
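As an illustration of the first group, here is a minimal sketch of stochastic hill climbing, assuming a one-dimensional hypothetical objective, Gaussian steps of fixed size, and a rule that keeps a candidate whenever it is no worse than the current point. The parameter values are arbitrary choices for the example:

```python
import random

def objective(x: float) -> float:
    """Hypothetical objective with its minimum of 0 at x = 0."""
    return x ** 2

def stochastic_hill_climbing(objective, bounds, n_iterations, step_size, rng):
    # Random starting point within the bounds
    best = rng.uniform(bounds[0], bounds[1])
    best_eval = objective(best)
    for _ in range(n_iterations):
        # Propose a random neighbour by taking a Gaussian step
        candidate = best + rng.gauss(0.0, step_size)
        candidate_eval = objective(candidate)
        # Keep the candidate if it is no worse than the current point
        if candidate_eval <= best_eval:
            best, best_eval = candidate, candidate_eval
    return best, best_eval

rng = random.Random(42)
solution, score = stochastic_hill_climbing(objective, (-5.0, 5.0), 1000, 0.1, rng)
print(f"f({solution:.4f}) = {score:.6f}")
```

The randomness enters in two places: the starting point and each proposed step. The acceptance rule itself is deterministic here, so on a function with several local optima this version can still get stuck.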

Some examples of stochastic optimization algorithms that are inspired by biological or physical processes include:

- Simulated Annealing

- Evolution Strategies

- Genetic Algorithm

- Differential Evolution

- Particle Swarm Optimization
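To show how such an algorithm deliberately accepts worse moves, here is a minimal sketch of simulated annealing on the one-dimensional Rastrigin function, which has many local minima and a global minimum of 0 at x = 0. It assumes Gaussian proposals, a simple `temp / (i + 1)` cooling schedule and the Metropolis acceptance rule; these are common textbook choices rather than the only way to implement the method:

```python
import math
import random

def rastrigin(x: float) -> float:
    """1-D Rastrigin function: many local minima, global minimum f(0) = 0."""
    return x * x - 10.0 * math.cos(2.0 * math.pi * x) + 10.0

def simulated_annealing(objective, bounds, n_iterations, step_size, temp, rng):
    lo, hi = bounds
    current = rng.uniform(lo, hi)
    current_eval = objective(current)
    best, best_eval = current, current_eval
    for i in range(n_iterations):
        # Propose a Gaussian step, clipped to the search bounds
        candidate = min(max(current + rng.gauss(0.0, step_size), lo), hi)
        candidate_eval = objective(candidate)
        # Track the best point seen so far
        if candidate_eval < best_eval:
            best, best_eval = candidate, candidate_eval
        # Temperature decreases over time (a simple "fast annealing" schedule)
        t = temp / float(i + 1)
        diff = candidate_eval - current_eval
        # Metropolis rule: always accept improvements, and accept worse
        # moves with a probability that shrinks as the temperature falls
        if diff < 0 or rng.random() < math.exp(-diff / t):
            current, current_eval = candidate, candidate_eval
    return best, best_eval

rng = random.Random(1)
best, score = simulated_annealing(rastrigin, (-5.12, 5.12), n_iterations=5000,
                                  step_size=0.5, temp=10.0, rng=rng)
print(f"best: f({best:.4f}) = {score:.6f}")
```

Early on, when the temperature is high, the search readily moves uphill and can leave the basin it started in; as the temperature falls it behaves more and more like the hill climber sketched earlier.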

Now that we are familiar with some examples of stochastic optimization algorithms, let’s look at some practical considerations when using them.

Practical Issues for Stochastic Optimization

There are important considerations when using stochastic optimization algorithms.

The stochastic nature of the procedure means that any single run of an algorithm will be different, given a different source of randomness used by the algorithm and, in turn, different starting points for the search and different decisions made during the search.

The pseudorandom number generator used as the source of randomness can be seeded to ensure the same sequence of random numbers is provided on each run of the algorithm. This is good for small demonstrations and tutorials. However, it is fragile, because it works against the inherent randomness of the procedure.
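A minimal sketch of seeding, using pure random search (sample points uniformly, keep the best) only because it is the simplest stochastic optimizer to write down. The objective and the seed values are hypothetical; `random.Random(seed)` is the standard-library way to get an independent, seeded pseudorandom generator:

```python
import random

def objective(x: float) -> float:
    return x ** 2  # hypothetical objective

def random_search(objective, bounds, n_samples, seed):
    """Pure random search: sample points uniformly and keep the best one."""
    rng = random.Random(seed)  # seeded pseudorandom number generator
    points = [rng.uniform(bounds[0], bounds[1]) for _ in range(n_samples)]
    best = min(points, key=objective)
    return best, objective(best)

run_a = random_search(objective, (-5.0, 5.0), 100, seed=7)
run_b = random_search(objective, (-5.0, 5.0), 100, seed=7)
run_c = random_search(objective, (-5.0, 5.0), 100, seed=8)
print(run_a == run_b)  # True: same seed, same sequence, same result
print(run_a == run_c)
```

The fixed seed makes `run_a` and `run_b` identical, which is convenient for a tutorial, but it also hides how much the result would vary under a different seed such as the one used for `run_c`.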

Instead, a given algorithm can be executed many times to control for the randomness of the procedure.

This idea of multiple runs of the algorithm can be used in two key situations:

- Comparing Algorithms

- Evaluating the Final Result

Algorithms may be compared based on the relative quality of the result found, the number of function evaluations performed, or some combination or derivation of these considerations. The result of any one run depends on the randomness used by the algorithm and, on its own, cannot meaningfully represent the algorithm’s capability. Instead, a strategy of repeated evaluation should be used.

Any comparison between stochastic optimization algorithms requires the repeated evaluation of each algorithm with a different source of randomness, and a summary of the probability distribution of the best results found, such as the mean and variance of the objective values. The mean result from each algorithm can then be compared.
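A minimal sketch of such a comparison, assuming two simple algorithms (pure random search and stochastic hill climbing) on a hypothetical objective, the same budget of 200 function evaluations each, and 30 repeats per algorithm with seeds 0 to 29 as the different sources of randomness. All names and parameter values are illustrative:

```python
import random
import statistics

def objective(x: float) -> float:
    return x ** 2  # hypothetical objective

def random_search(rng, n_evals=200, bounds=(-5.0, 5.0)):
    # Best objective value among n_evals uniformly sampled points
    return min(objective(rng.uniform(bounds[0], bounds[1])) for _ in range(n_evals))

def hill_climbing(rng, n_evals=200, bounds=(-5.0, 5.0), step=0.5):
    x = rng.uniform(bounds[0], bounds[1])
    fx = objective(x)
    for _ in range(n_evals - 1):  # same evaluation budget as random_search
        candidate = x + rng.gauss(0.0, step)
        f_candidate = objective(candidate)
        if f_candidate <= fx:
            x, fx = candidate, f_candidate
    return fx

def evaluate(algorithm, n_repeats=30):
    # Each repeat uses a different seed, i.e. a different source of randomness
    scores = [algorithm(random.Random(seed)) for seed in range(n_repeats)]
    return statistics.mean(scores), statistics.variance(scores)

for name, algorithm in [("random search", random_search), ("hill climbing", hill_climbing)]:
    mean, var = evaluate(algorithm)
    print(f"{name}: mean={mean:.6f} variance={var:.6f}")
```

Holding the evaluation budget fixed keeps the comparison fair; the variance shows how much any single run of each algorithm could be trusted.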

Similarly, any single run of a chosen optimization algorithm does not, on its own, meaningfully represent the global optima of the objective function. Instead, a strategy of repeated evaluation should be used to develop a distribution of optimal solutions.

The maximum or minimum of the distribution can be taken as the final solution, and the distribution itself provides a point of reference and confidence that the solution found is “relatively good” or “good enough” given the resources expended.
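A minimal sketch of this idea for a minimization problem, assuming a hypothetical objective with its minimum at x = 1 and a stochastic hill climber repeated with 30 different seeds. The best run is reported as the final result, and the mean and standard deviation across runs give the context for judging it:

```python
import random
import statistics

def objective(x: float) -> float:
    return (x - 1.0) ** 2  # hypothetical objective with its minimum at x = 1

def stochastic_hill_climbing(rng, n_iterations=200, bounds=(-5.0, 5.0), step=0.5):
    x = rng.uniform(bounds[0], bounds[1])
    fx = objective(x)
    for _ in range(n_iterations):
        candidate = x + rng.gauss(0.0, step)
        f_candidate = objective(candidate)
        if f_candidate <= fx:
            x, fx = candidate, f_candidate
    return x, fx

# Repeat the same algorithm with 30 different seeds to build a distribution of results.
runs = [stochastic_hill_climbing(random.Random(seed)) for seed in range(30)]
scores = [fx for _, fx in runs]

# Take the best (minimum) run as the final result; the distribution gives context for it.
best_x, best_score = min(runs, key=lambda run: run[1])
print(f"final result: f({best_x:.4f}) = {best_score:.6f}")
print(f"across runs: mean={statistics.mean(scores):.6f}, stdev={statistics.stdev(scores):.6f}")
```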

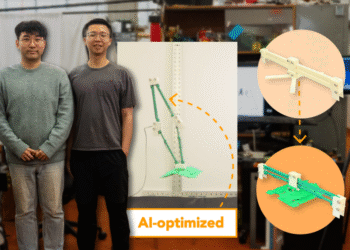

Multi-Restart:

An approach for improving the likelihood of locating the global optima via the repeated application of a stochastic optimization algorithm to an optimization problem.

The repeated application of a stochastic optimization algorithm to an objective function is sometimes referred to as a multi-restart strategy and may be built into the optimization algorithm itself or prescribed more generally as a procedure around the chosen stochastic optimization algorithm.
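A minimal sketch of the second option, a restart procedure wrapped around a chosen algorithm. It assumes a stochastic hill climber as the inner algorithm and the one-dimensional Rastrigin function as a multimodal test objective; `hill_climb` and `multi_restart` are hypothetical names, and the restart count, iteration count and step size are arbitrary:

```python
import math
import random

def rastrigin(x: float) -> float:
    """1-D Rastrigin function: many local minima, global minimum f(0) = 0."""
    return x * x - 10.0 * math.cos(2.0 * math.pi * x) + 10.0

def hill_climb(objective, start, n_iterations, step, rng):
    # Inner stochastic optimizer: accept Gaussian steps that are no worse
    x, fx = start, objective(start)
    for _ in range(n_iterations):
        candidate = x + rng.gauss(0.0, step)
        f_candidate = objective(candidate)
        if f_candidate <= fx:
            x, fx = candidate, f_candidate
    return x, fx

def multi_restart(objective, bounds, n_restarts, n_iterations, step, seed):
    rng = random.Random(seed)
    best, best_eval = None, math.inf
    for _ in range(n_restarts):
        # Each restart begins from a fresh random point in the search space
        start = rng.uniform(bounds[0], bounds[1])
        x, fx = hill_climb(objective, start, n_iterations, step, rng)
        # Keep the best result across all restarts
        if fx < best_eval:
            best, best_eval = x, fx
    return best, best_eval

best, score = multi_restart(rastrigin, (-5.12, 5.12), n_restarts=20,
                            n_iterations=500, step=0.1, seed=1)
print(f"f({best:.4f}) = {score:.6f}")
```

With a small step size, each individual climb tends to settle in the local minimum nearest its starting point; the restarts spread those starting points across the search space, which raises the chance that at least one of them lands in the basin of the global optimum.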