NUS researchers have demonstrated that a single transistor can mimic both neural and synaptic behaviors, marking a significant step toward brain-inspired computing

Researchers at the National University of Singapore (NUS) have demonstrated that a single, standard silicon transistor, the basic building block of the microchips found in computers, smartphones, and nearly all modern electronics, can mimic the functions of both a biological neuron and a synapse when operated in an unconventional manner.

The research, led by Associate Professor Mario Lanza from the Department of Materials Science and Engineering at NUS’s College of Design and Engineering, offers a promising path toward scalable, energy-efficient hardware for artificial neural networks (ANNs). This advance marks a significant step forward in neuromorphic computing, a field that aims to replicate the brain’s efficiency in processing information. The research was published in Nature on March 26, 2025.

Putting the brains in silicon

The world’s most sophisticated computers already exist inside our heads. Studies show that the human brain is, by and large, more energy-efficient than electronic processors, thanks to almost 90 billion neurons that form some 100 trillion connections with each other, and synapses that tune their strength over time. This process, known as synaptic plasticity, underpins learning and memory.

For decades, scientists have sought to replicate this efficiency using artificial neural networks (ANNs). ANNs, which are loosely inspired by how the brain processes information, have recently driven remarkable advances in artificial intelligence (AI). But while they borrow biological terminology, the similarities run only skin deep: software-based ANNs, such as those powering large language models like ChatGPT, have a voracious appetite for computational resources and, therefore, electricity. This makes them impractical for many applications.

Neuromorphic computing aims to mimic the computing power and energy efficiency of the brain. This requires not only redesigning system architecture to perform memory and computation in the same place, an approach known as in-memory computing (IMC), but also developing electronic devices that exploit physical and electronic phenomena capable of replicating more faithfully how neurons and synapses work. However, current neuromorphic computing systems are stymied by the need for complex multi-transistor circuits or emerging materials that have yet to be validated for large-scale manufacturing.

“To enable true neuromorphic computing, where microchips behave like biological neurons and synapses, we need hardware that is both scalable and power-efficient,” said Professor Lanza.

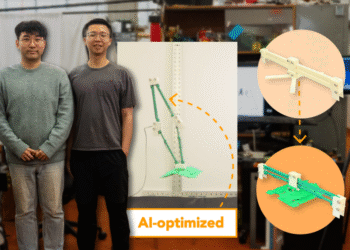

A Breakthrough Using Standard Silicon

The NUS research team has now demonstrated that a single, standard silicon transistor, when arranged and operated in a specific way, can replicate both neural firing and synaptic weight changes, the fundamental mechanisms of biological neurons and synapses. This was achieved by setting the resistance of the bulk terminal to specific values, which makes it possible to control two physical phenomena taking place in the transistor: punch-through impact ionization and charge trapping. Moreover, the team built a two-transistor cell capable of operating in either a neuron or a synaptic regime, which the researchers have called “Neuro-Synaptic Random Access Memory”, or NS-RAM.

“Other approaches require complex transistor arrays or novel materials with uncertain manufacturability, but our method uses commercial CMOS (complementary metal-oxide-semiconductor) technology, the same platform found in modern computer processors and memory microchips,” explained Professor Lanza. “This means it’s scalable, reliable, and compatible with existing semiconductor fabrication processes.”

In experiments, the NS-RAM cell demonstrated low power consumption, maintained stable performance over many cycles of operation, and exhibited consistent, predictable behavior across different devices, all of which are desirable attributes for building reliable ANN hardware suited to real-world applications. The team’s breakthrough marks a step change in the development of compact, power-efficient AI processors that could enable faster, more responsive computing.