Breakthrough light-powered chip speeds up AI training and reduces energy consumption.

Engineers at Penn have developed the first programmable chip capable of training nonlinear neural networks using light, a major breakthrough that could dramatically speed up AI training, reduce energy use, and potentially lead to fully light-powered computing systems.

Unlike conventional AI chips that rely on electricity, this new chip is photonic, meaning it performs calculations using beams of light. Published in Nature Photonics, the research shows how the chip manipulates light to carry out the complex nonlinear operations essential to modern artificial intelligence.

“Nonlinear functions are critical for training deep neural networks,” says Liang Feng, Professor of Materials Science and Engineering and Electrical and Systems Engineering, and the paper’s senior author. “Our goal was to make this happen in photonics for the first time.”

The Missing Piece in Photonic AI

Most AI systems today rely on neural networks, software designed to mimic biological neural tissue. Just as neurons connect to allow biological creatures to think, neural networks link together layers of simple units, or “nodes,” enabling AI systems to perform complex tasks.

In both artificial and biological systems, these nodes only “fire” once a threshold is reached, a nonlinear process that allows small changes in input to cause larger, more complex changes in output.

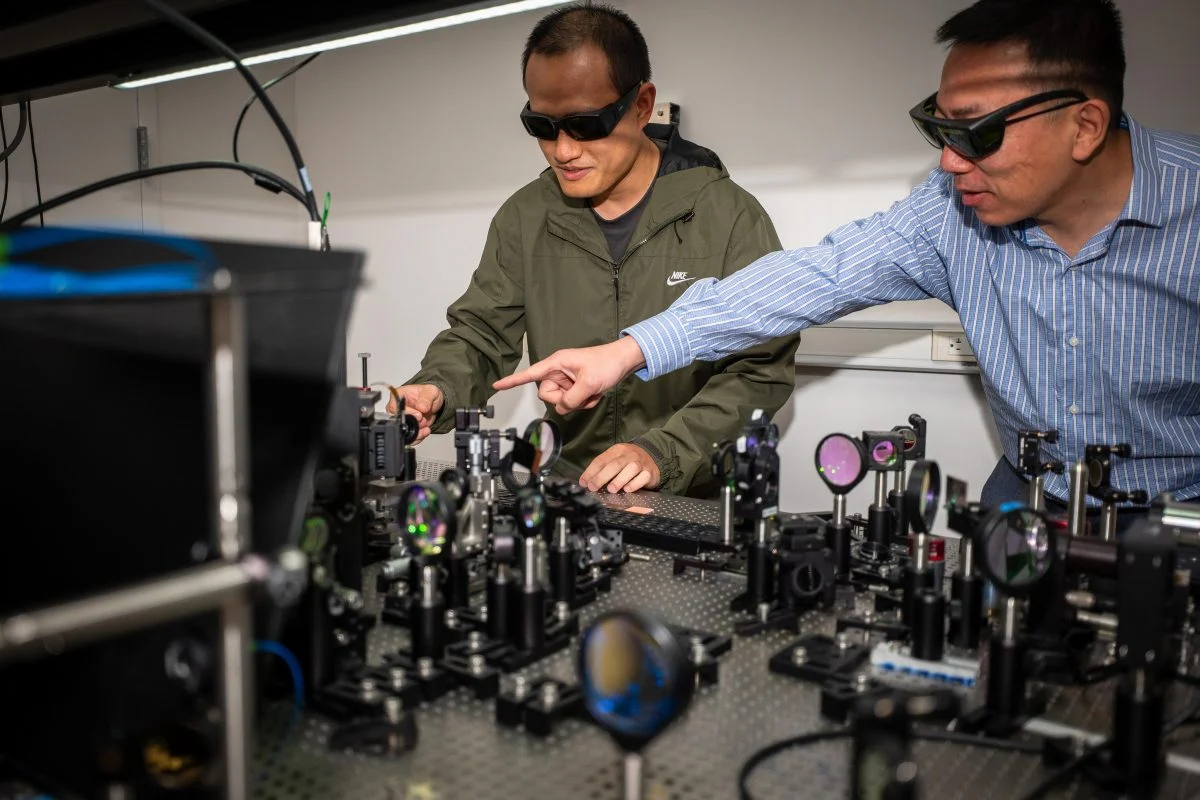


Postdoctoral fellow Tianwei Wu (left) and Professor Liang Feng (right) in the lab, demonstrating some of the apparatus used to develop the new, light-powered chip.

Without that nonlinearity, adding layers does nothing: the system simply reduces to a single-layer linear operation, where inputs are merely added together, and no real learning occurs.
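The collapse described above can be checked with a few lines of NumPy. This is a minimal sketch with made-up weights (`W1`, `W2`) and a made-up input (`x`), and a ReLU standing in for a generic threshold-style nonlinearity; none of these values come from the Penn work.

```python
import numpy as np

# Hypothetical two-layer network: 2 inputs -> 2 hidden nodes -> 1 output.
W1 = np.array([[1.0, -1.0],
               [-1.0, 1.0]])
W2 = np.array([[2.0, 1.0]])
x = np.array([1.0, 2.0])

def relu(z):
    """Threshold-style nonlinearity: a node passes signal only above zero."""
    return np.maximum(z, 0.0)

# Two purely linear layers applied one after the other.
two_linear_layers = W2 @ (W1 @ x)

# The same computation folded into a single matrix, i.e. a single layer.
single_layer = (W2 @ W1) @ x

# With a nonlinearity between the layers, the folding no longer works.
with_nonlinearity = W2 @ relu(W1 @ x)

linear_stack_collapses = np.allclose(two_linear_layers, single_layer)
nonlinear_stack_collapses = np.allclose(with_nonlinearity, single_layer)

print(linear_stack_collapses, nonlinear_stack_collapses)
```

The two linear layers give exactly the same answer as one merged layer, while the version with a threshold between the layers does not, which is why depth only pays off once a nonlinearity is present.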

While many research teams, including teams at Penn Engineering, have developed light-powered chips capable of handling linear mathematical operations, none has solved the challenge of representing nonlinear functions using only light, until now.

“Without nonlinear functions, photonic chips can’t train deep networks or perform truly intelligent tasks,” says Tianwei Wu (Gr’24), a postdoctoral fellow in ESE and the paper’s first author.

Reshaping Light with Light

The team’s breakthrough begins with a special semiconductor material that responds to light. When a beam of “signal” light (carrying the input data) passes through the material, a second “pump” beam shines in from above, adjusting how the material reacts.

By changing the shape and intensity of the pump beam, the team can control how the signal light is absorbed, transmitted, or amplified, depending on its intensity and the material’s behavior. This process “programs” the chip to perform different nonlinear functions.

“We’re not changing the chip’s structure,” says Feng. “We’re using light itself to create patterns inside the material, which then reshapes how the light moves through it.”

The result is a reconfigurable system that can express a wide range of mathematical functions depending on the pump pattern. That flexibility allows the chip to learn in real time, adjusting its behavior based on feedback from its output.

Training at the Speed of Light

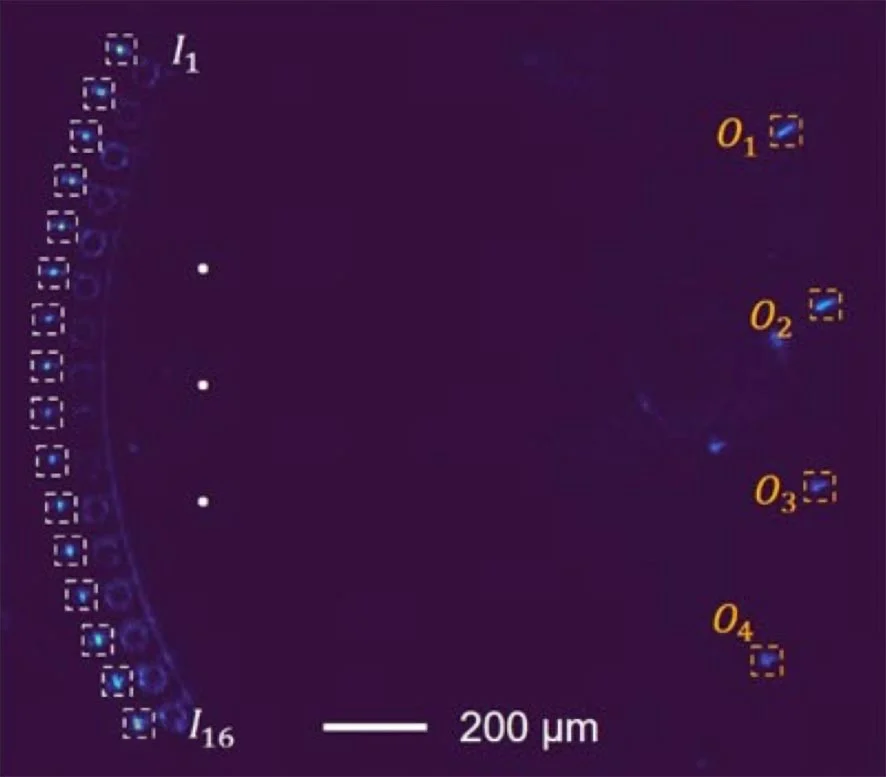

To test the chip’s potential, the team used it to solve benchmark AI problems. The platform achieved over 97% accuracy on a simple nonlinear decision boundary task and over 96% on the well-known Iris flower dataset, a machine learning standard.

In both cases, the photonic chip matched or outperformed traditional digital neural networks, but used fewer operations and did not need power-hungry electronic components.

In one striking result, just four nonlinear optical connections on the chip were equivalent to 20 linear electronic connections with fixed nonlinear activation functions in a traditional model. That efficiency hints at what’s possible as the architecture scales.

Unlike previous photonic systems, which are fixed after fabrication, the Penn chip starts as a blank canvas. The pump light acts like a brush, drawing reprogrammable instructions into the material.

“This is a true proof-of-concept for a field-programmable photonic computer,” says Feng. “It’s a step toward a future where we can train AI at the speed of light.”

Future Directions

While the current work focuses on polynomials, a flexible family of functions widely used in machine learning, the team believes their approach could enable even more powerful operations in the future, such as exponential or inverse functions. That would pave the way for photonic systems that tackle large-scale tasks like training large language models.
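To give a feel for what a programmable polynomial nonlinearity means, here is a small software analogy in NumPy. It is only a sketch under stated assumptions: the function names (`poly_activation`, `fit_coeffs`), the quadratic target, and the learning settings are all hypothetical, and ordinary gradient descent on numbers stands in for whatever physical feedback loop the chip uses to adjust its pump pattern.

```python
import numpy as np

def poly_activation(x, coeffs):
    """Evaluate c0 + c1*x + c2*x**2 + ... for each input value."""
    powers = np.stack([x**k for k in range(len(coeffs))], axis=-1)
    return powers @ coeffs

def fit_coeffs(x, target, degree=2, lr=0.1, steps=2000):
    """Adjust the coefficients from output feedback (mean squared error)."""
    coeffs = np.zeros(degree + 1)
    powers = np.stack([x**k for k in range(degree + 1)], axis=-1)
    for _ in range(steps):
        error = powers @ coeffs - target          # feedback from the output
        grad = 2.0 * powers.T @ error / len(x)    # gradient of the MSE
        coeffs -= lr * grad
    return coeffs

# Hypothetical target response: a simple quadratic curve on [-1, 1].
x = np.linspace(-1.0, 1.0, 41)
target = x**2

coeffs = fit_coeffs(x, target)
max_error = np.max(np.abs(poly_activation(x, coeffs) - target))
print(coeffs, max_error)
```

In this analogy the coefficient vector plays the role of the reprogrammable setting: changing it changes which nonlinear function is applied, without touching the rest of the code.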

By replacing heat-generating electronics with low-energy optical components, the platform also promises to cut energy consumption in AI data centers, potentially transforming the economics of machine learning.

“This could be the beginning of photonic computing as a serious alternative to electronics,” says Feng. “Penn is the birthplace of ENIAC, the world’s first digital computer; this chip might be the first real step toward a photonic ENIAC.”