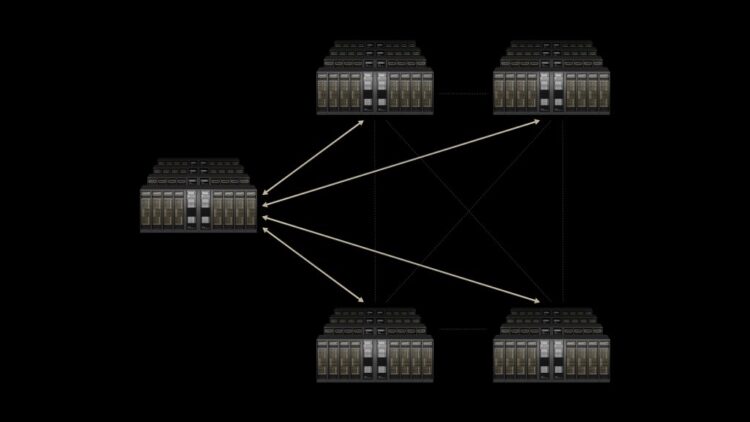

When AI data centres run out of space, they face a costly dilemma: build bigger facilities or find ways to make multiple locations work together seamlessly. NVIDIA’s latest Spectrum-XGS Ethernet technology promises to solve this challenge by connecting AI data centres across long distances into what the company calls “giga-scale AI super-factories.”

Announced ahead of Hot Chips 2025, this networking innovation represents the company’s answer to a growing problem that’s forcing the AI industry to rethink how computational power gets distributed.

The problem: When one building isn’t enough

As artificial intelligence models become more sophisticated and demanding, they require enormous computational power that often exceeds what any single facility can provide. Traditional AI data centres face constraints in power capacity, physical space, and cooling capabilities.

When companies need more processing power, they typically have to build entirely new facilities, but coordinating work between separate locations has been difficult because of networking limitations. The issue lies in standard Ethernet infrastructure, which suffers from high latency, unpredictable performance fluctuations (known as “jitter”), and inconsistent data transfer speeds when linking distant locations.

These problems make it difficult for AI systems to efficiently distribute complex calculations across multiple sites.
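To make the terms concrete, here is a minimal Python sketch of how latency and jitter might be summarised from a set of round-trip measurements. The sample values are invented for illustration, and jitter is computed as the mean absolute difference between consecutive samples, which is one simple convention among several. Nothing here reflects how Spectrum-XGS measures or controls either quantity.

```python
from statistics import mean


def mean_latency_ms(samples):
    """Average round-trip latency across all samples, in milliseconds."""
    return mean(samples)


def jitter_ms(samples):
    """Mean absolute difference between consecutive latency samples.

    This is one simple way to express jitter; other definitions exist.
    """
    diffs = [abs(later - earlier) for earlier, later in zip(samples, samples[1:])]
    return mean(diffs)


# Hypothetical measurements (ms), invented purely for illustration.
local_link = [0.50, 0.52, 0.49, 0.51, 0.50]
long_haul = [12.0, 15.5, 11.8, 19.2, 12.4]

for name, samples in (("local", local_link), ("long-haul", long_haul)):
    print(f"{name}: latency {mean_latency_ms(samples):.2f} ms, "
          f"jitter {jitter_ms(samples):.2f} ms")
```

A link can have a tolerable average latency and still be hard to schedule work across if the jitter figure is large, because each step of a distributed calculation waits on the slowest exchange.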

NVIDIA’s solution: Scale-across technology

Spectrum-XGS Ethernet introduces what NVIDIA terms “scale-across” capability, a third approach to AI computing that complements the existing “scale-up” (making individual processors more powerful) and “scale-out” (adding more processors within the same location) strategies.

The technology integrates into NVIDIA’s existing Spectrum-X Ethernet platform and includes several key innovations:

- Distance-adaptive algorithms that automatically adjust network behaviour based on the physical distance between facilities

- Advanced congestion control that prevents data bottlenecks during long-distance transmission

- Precision latency management to ensure predictable response times

- End-to-end telemetry for real-time network monitoring and optimisation

According to NVIDIA’s announcement, these enhancements can “almost double the performance of the NVIDIA Collective Communications Library,” which manages communication between multiple graphics processing units (GPUs) and computing nodes.
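One general reason distance matters for congestion control is the bandwidth-delay product: the amount of data that must be in flight to keep a link busy grows with round-trip time. The sketch below is a back-of-the-envelope Python calculation. The 400 Gb/s link speed and the round-trip times are assumed values chosen for illustration, and the code is not a description of NVIDIA’s distance-adaptive algorithms, which the announcement does not detail.

```python
def bandwidth_delay_product_bytes(link_gbps: float, rtt_ms: float) -> float:
    """Bytes that must be in flight to keep a link of the given speed full."""
    bits_per_second = link_gbps * 1e9
    rtt_seconds = rtt_ms / 1e3
    return bits_per_second * rtt_seconds / 8


# Assumed link speed and round-trip times, for illustration only.
LINK_GBPS = 400.0
for label, rtt_ms in (("same building", 0.01), ("nearby city", 1.0), ("distant site", 10.0)):
    in_flight_mb = bandwidth_delay_product_bytes(LINK_GBPS, rtt_ms) / 1e6
    print(f"{label}: RTT {rtt_ms} ms, about {in_flight_mb:.1f} MB in flight")
```

Because the in-flight volume scales linearly with round-trip time, buffer sizes and sending rates tuned for links inside one building are unlikely to suit links between sites, which is the kind of mismatch distance-aware tuning would need to address.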

Real-world implementation

CoreWeave, a cloud infrastructure company specialising in GPU-accelerated computing, plans to be among the first adopters of Spectrum-XGS Ethernet.

“With NVIDIA Spectrum-XGS, we can connect our data centres into a single, unified supercomputer, giving our customers access to giga-scale AI that will accelerate breakthroughs across every industry,” said Peter Salanki, CoreWeave’s cofounder and chief technology officer.

This deployment will serve as a notable test case for whether the technology can deliver on its promises in real-world conditions.

Industry context and implications

The announcement follows a series of networking-focused releases from NVIDIA, including the original Spectrum-X platform and Quantum-X silicon photonics switches. This pattern suggests the company recognises networking infrastructure as a critical bottleneck in AI development.

“The AI industrial revolution is right here, and large-scale AI factories are the crucial infrastructure,” said Jensen Huang, NVIDIA’s founder and CEO, in the press release. While Huang’s characterisation reflects NVIDIA’s marketing perspective, the underlying challenge he describes, the need for more computational capacity, is acknowledged across the AI industry.

The technology could affect how AI data centres are planned and operated. Instead of building massive single facilities that strain local power grids and real estate markets, companies might distribute their infrastructure across multiple smaller locations while maintaining performance levels.

Technical considerations and limitations

However, several factors could influence Spectrum-XGS Ethernet’s practical effectiveness. Network performance across long distances remains subject to physical limitations, including the speed of light and the quality of the underlying internet infrastructure between locations. The technology’s success will largely depend on how well it can work within those constraints.

Additionally, the complexity of managing distributed AI data centres extends beyond networking to include data synchronisation, fault tolerance, and regulatory compliance across jurisdictions. These are challenges that networking improvements alone cannot solve.
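The speed-of-light limit mentioned above can be estimated directly. The Python sketch below assumes light travels through optical fibre at roughly the vacuum speed divided by a refractive index of about 1.47, a typical figure for silica fibre, and treats the distances as hypothetical fibre route lengths. It gives a lower bound only: switching, queuing, and indirect routing all add to it.

```python
C_VACUUM_KM_PER_S = 299_792.458  # speed of light in vacuum
FIBRE_REFRACTIVE_INDEX = 1.47    # assumed typical value for silica fibre


def one_way_delay_ms(distance_km: float,
                     refractive_index: float = FIBRE_REFRACTIVE_INDEX) -> float:
    """Minimum one-way propagation delay over a fibre route, in milliseconds."""
    speed_km_per_s = C_VACUUM_KM_PER_S / refractive_index
    return distance_km / speed_km_per_s * 1e3


def round_trip_ms(distance_km: float,
                  refractive_index: float = FIBRE_REFRACTIVE_INDEX) -> float:
    """Minimum round-trip propagation delay over the same route."""
    return 2 * one_way_delay_ms(distance_km, refractive_index)


# Hypothetical route lengths in kilometres, for illustration only.
for km in (10, 100, 1000):
    print(f"{km} km: at least {round_trip_ms(km):.3f} ms round trip")
```

No networking technology can go below this floor, so improvements of the kind NVIDIA describes can only reduce the overhead that sits on top of it.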

Availability and market impact

NVIDIA states that Spectrum-XGS Ethernet is “available now” as part of the Spectrum-X platform, though pricing and specific deployment timelines haven’t been disclosed. The technology’s adoption rate will likely depend on its cost-effectiveness compared with alternative approaches, such as building larger single-site facilities or using existing networking solutions.

The bottom line for consumers and businesses is this: if NVIDIA’s technology works as promised, we could see faster AI services, more powerful applications, and potentially lower costs as companies gain efficiency through distributed computing. However, if the technology fails to deliver in real-world conditions, AI companies will continue to face the expensive choice between building ever-larger single facilities and accepting performance compromises.

CoreWeave’s upcoming deployment will serve as the first major test of whether connecting AI data centres across distances can truly work at scale. The results will likely determine whether other companies follow suit or stick with traditional approaches. For now, NVIDIA has presented an ambitious vision, but the AI industry is still waiting to see whether the reality matches the promise.