An analysis by Epoch AI, a nonprofit AI research institute, suggests the AI industry may not be able to eke large performance gains out of reasoning AI models for much longer. As soon as within a year, progress from reasoning models could slow down, according to the report’s findings.

Reasoning models such as OpenAI’s o3 have led to substantial gains on AI benchmarks in recent months, particularly benchmarks measuring math and programming skills. The models can apply more computing to problems, which can improve their performance, with the downside being that they take longer than conventional models to complete tasks.

Reasoning models are developed by first training a conventional model on a massive amount of data, then applying a technique called reinforcement learning, which effectively gives the model “feedback” on its solutions to difficult problems.

So far, frontier AI labs like OpenAI haven’t applied an enormous amount of computing power to the reinforcement learning stage of reasoning model training, according to Epoch.

That’s changing. OpenAI has said that it applied around 10x more computing to train o3 than its predecessor, o1, and Epoch assumes that most of this computing was devoted to reinforcement learning. And OpenAI researcher Dan Roberts recently revealed that the company’s future plans call for prioritizing reinforcement learning to use far more computing power, even more than for the initial model training.

But there’s still an upper bound to how much computing can be applied to reinforcement learning, per Epoch.

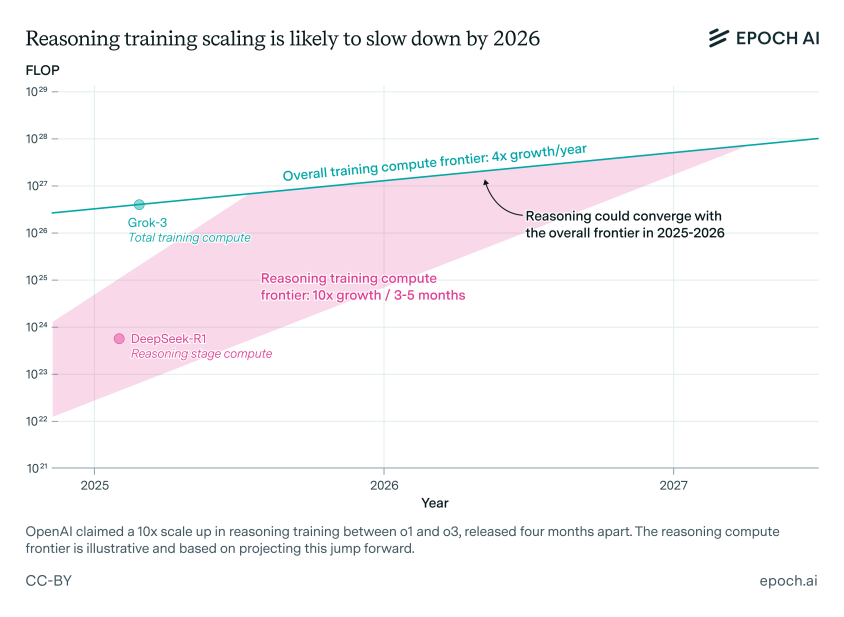

Josh You, an analyst at Epoch and the author of the analysis, explains that performance gains from standard AI model training are currently quadrupling every year, while performance gains from reinforcement learning are growing tenfold every 3-5 months. The progress of reasoning training will “in all likelihood converge with the overall frontier by 2026,” he continues.
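To make the gap between those two growth rates concrete, the arithmetic can be sketched in a few lines of Python. This is only an illustration of the figures quoted above, not Epoch's own model: the function names are made up for this example, and the starting gap between the two curves is a hypothetical input, since the analysis as summarized here does not state one.

```python
import math

def annualized_factor(factor: float, months: float) -> float:
    """Growth factor over 12 months, given `factor` growth every `months` months."""
    return factor ** (12.0 / months)

def months_to_close_gap(gap_ratio: float, fast_annual: float, slow_annual: float) -> float:
    """Months until a faster-growing series catches a slower one.

    `gap_ratio` is how many times larger the slower series is at the start.
    It is a hypothetical input for illustration, not a figure from the analysis.
    """
    rate_difference = math.log(fast_annual) - math.log(slow_annual)
    return 12.0 * math.log(gap_ratio) / rate_difference

# Figures quoted in the article.
STANDARD_ANNUAL = 4.0                    # quadrupling every year
rl_fast = annualized_factor(10.0, 3.0)   # tenfold every 3 months
rl_slow = annualized_factor(10.0, 5.0)   # tenfold every 5 months

if __name__ == "__main__":
    print(f"Standard training: {STANDARD_ANNUAL:.0f}x per year")
    print(f"Reinforcement learning: {rl_slow:,.0f}x to {rl_fast:,.0f}x per year")
    # Assumed 1,000x starting gap, chosen only to show the calculation.
    months = months_to_close_gap(1000.0, rl_slow, STANDARD_ANNUAL)
    print(f"Months to close an assumed 1,000x gap: {months:.1f}")
```

Annualized, tenfold growth every 3-5 months works out to somewhere between a few hundred and ten thousand times per year, against four times per year for standard training. Whatever the true starting gap, a difference that large closes quickly, which is the intuition behind the convergence You describes.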

Epoch’s analysis makes a number of assumptions, and draws in part on public comments from AI company executives. But it also makes the case that scaling reasoning models may prove to be challenging for reasons besides computing, including high overhead costs for research.

“If there’s a persistent overhead cost needed for research, reasoning models won’t scale as far as predicted,” writes You. “Rapid compute scaling is potentially a very important ingredient in reasoning model development, so it’s worth tracking this closely.”

Any indication that reasoning models may reach some sort of limit in the near future is likely to worry the AI industry, which has invested enormous resources developing these types of models. Already, studies have shown that reasoning models, which can be incredibly expensive to run, have serious flaws, like a tendency to hallucinate more than certain conventional models.