Researchers at DeepSeek on Monday released a new experimental model called V3.2-exp, designed to dramatically lower inference costs in long-context operations. DeepSeek announced the model with a post on Hugging Face and also posted a linked academic paper on GitHub.

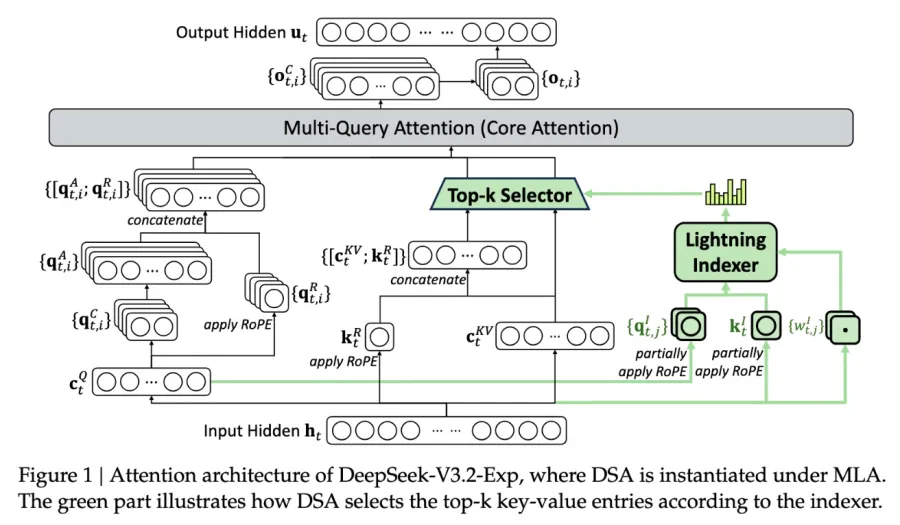

The most important feature of the new model is called DeepSeek Sparse Attention, an intricate system described in detail in the accompanying diagram. In essence, the system uses a module called a “lightning indexer” to prioritize specific excerpts from the context window. After that, a separate system called a “fine-grained token selection system” chooses specific tokens from within those excerpts to load into the module’s limited attention window. Taken together, they allow the Sparse Attention models to operate over long portions of context with comparatively small server loads.
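The two-stage idea described above can be illustrated with a toy sketch. This is not DeepSeek's implementation: the function name `sparse_attention`, its parameters, and the mean-key chunk scoring are hypothetical choices made purely for illustration. The point is only that attention is computed over a small selected subset of tokens rather than the whole context.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def sparse_attention(query, keys, values, chunk_size=4, top_chunks=2, top_tokens=4):
    """Toy two-stage sparse attention for one query (illustrative assumption only)."""
    n = len(keys)

    # Stage 1 (stand-in for an indexer): score each chunk cheaply by its
    # mean key, then keep only the highest-scoring chunks.
    def chunk_score(start):
        chunk = keys[start:start + chunk_size]
        mean_key = [sum(col) / len(chunk) for col in zip(*chunk)]
        return dot(mean_key, query)

    best_starts = sorted(range(0, n, chunk_size), key=chunk_score, reverse=True)[:top_chunks]
    candidates = [i for s in best_starts for i in range(s, min(s + chunk_size, n))]

    # Stage 2 (stand-in for token selection): keep the best individual tokens
    # from the surviving chunks.
    ranked = sorted(candidates, key=lambda i: dot(keys[i], query), reverse=True)
    kept = sorted(ranked[:top_tokens])

    # Ordinary softmax attention, but only over the kept tokens.
    logits = [dot(keys[i], query) / math.sqrt(len(query)) for i in kept]
    peak = max(logits)
    exps = [math.exp(l - peak) for l in logits]
    total = sum(exps)
    weights = [e / total for e in exps]
    output = [sum(w * values[i][j] for w, i in zip(weights, kept))
              for j in range(len(values[0]))]
    return output, kept


# Example: 16 tokens, of which only tokens 8 through 11 match the query.
query = [1.0, 0.0]
keys = [[0.0, 1.0]] * 8 + [[1.0, 0.0]] * 4 + [[0.0, 1.0]] * 4
values = [[float(i)] for i in range(16)]
output, kept = sparse_attention(query, keys, values)
print(kept)    # [8, 9, 10, 11]
print(output)  # [9.5]
```

In this sketch the final attention step touches 4 tokens instead of 16. The savings claimed for long-context workloads come from the same kind of reduction at much larger scale, though the real system's scoring and selection details are in the paper, not here.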

For long-context operations, the benefits of the system are significant. Preliminary testing by DeepSeek found that the price of a simple API call could be reduced by as much as half in long-context situations. Further testing will be required to build a more robust assessment, but because the model is open-weight and freely available on Hugging Face, it won’t be long before third-party tests can assess the claims made in the paper.

DeepSeek’s new model is one of a string of recent breakthroughs tackling the problem of inference costs: essentially, the server costs of operating a pre-trained AI model, as distinct from the cost of training it. In DeepSeek’s case, the researchers were looking for ways to make the fundamental transformer architecture operate more efficiently, and they found that there are significant improvements to be made.

Based in China, DeepSeek has been an unusual figure in the AI boom, particularly for those who view AI research as a nationalist struggle between the U.S. and China. The company made waves at the beginning of the year with its R1 model, trained using primarily reinforcement learning at a far lower cost than its American competitors. But the model has not sparked a wholesale revolution in AI training, as some predicted, and the company has receded from the spotlight in the months since.

The new “sparse attention” approach is unlikely to produce the same uproar as R1, but it could still teach U.S. providers some much-needed tricks to help keep inference costs low.